Tree of Thoughts

Section 1: An Introduction to Artificial Intelligence and Large Language Models

Artificial Intelligence (AI) serves as a beacon, illuminating the path towards a technology-driven future. At the heart of this shift sit Large Language Models (LLMs), which reflect the strides AI has taken in processing and generating language.

AI is an umbrella term for the many technologies that enable machines to emulate human intelligence. This includes activities such as reasoning, learning, problem-solving, perception, and most notably, language understanding. It’s a vibrant mosaic, comprising multiple subfields like machine learning, neural networks, computer vision, and natural language processing (NLP).

One of the most fascinating components of human intelligence that AI seeks to replicate is our capacity for language. This presents both opportunities and challenges, the exploration of which has given birth to LLMs. These models, developed within the NLP domain, are trained on massive datasets of text and demonstrate an uncanny ability to generate text that aligns closely with human-like language structures.

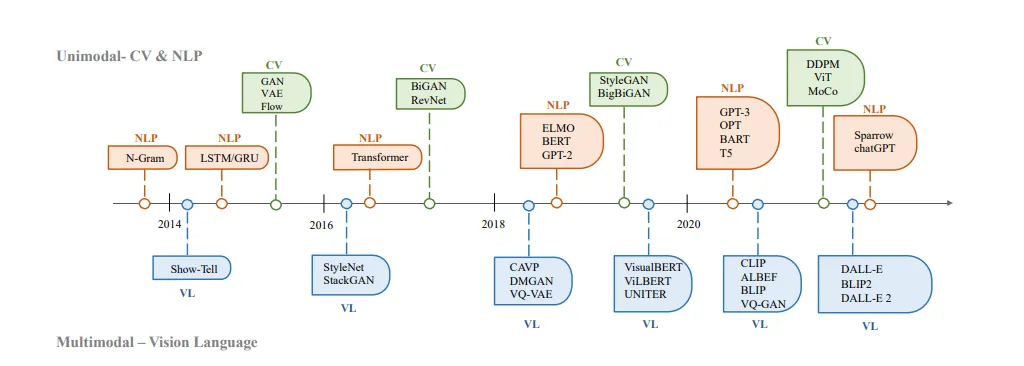

Figure 1. The evolution of AI and Large Language Models

OpenAI’s GPT-3 has been a trailblazer in this domain, showcasing the potential that LLMs hold. With 175 billion parameters, GPT-3 demonstrated a proficiency in generating contextually relevant, coherent text that earlier models had not reached. Its capabilities extend beyond mere text generation, encompassing answering queries, translating languages, and even crafting poetry.

Following the success of GPT-3, OpenAI introduced its successor, GPT-4. OpenAI has not disclosed GPT-4’s parameter count, but the model further advanced the capabilities of LLMs, improving its capacity for understanding and generating human-like text. GPT-4 pushes the boundaries of what’s achievable with AI, promising new avenues for innovation and applications across diverse fields.

Nevertheless, despite these significant advances, utilizing the full potential of LLMs in complex reasoning tasks is still a formidable challenge. While LLMs can simulate human-like language generation, they may struggle with complex problem-solving or reasoning tasks that require a deep understanding of the subject matter, contextual awareness, and sophisticated logical inference. As such, we see emerging technologies aiming to bridge this gap. Among these are the Chain-of-Thoughts (CoT) and Tree-of-Thoughts (ToT) frameworks, offering unique approaches to maximize the capabilities of LLMs.

Section 2: Unraveling the Chain-of-Thoughts Framework

Imagine an orchestra where each instrument, while producing beautiful sound, plays independently of the others. The result would be cacophony rather than harmony. Now, visualize a conductor guiding each instrument, ensuring they play in sync, producing a symphony. The Chain-of-Thoughts (CoT) framework functions like this conductor, orchestrating the flow of interactions in Large Language Models (LLMs).

CoT represents a structured methodology to operate LLMs for complex reasoning tasks. The framework is designed around the idea of maintaining an ordered sequence of thoughts in a conversation, thereby ensuring the continuity and context that LLMs inherently lack.

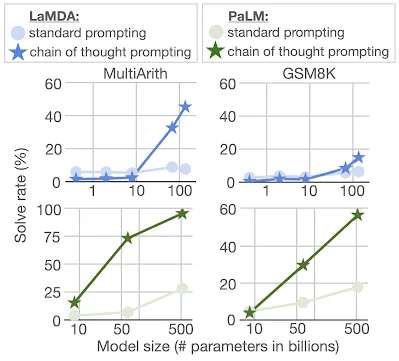

Figure 2. Comparison of CoT vs. Standard Prompting methods

The fundamental structure of the CoT framework comprises a large language model and a queue. The queue acts as a repository that stores the interaction history, including both the user’s inputs and the model’s outputs. As the conversation progresses, every new user query or model response is added to the queue, and the model then generates its response based on the entire queue’s content.
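The queue described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: `HistoryQueue` and `echo_llm` are hypothetical names, and `echo_llm` is a stand-in that only reports how much context it received instead of calling a real model.

```python
from collections import deque
from typing import Callable, Deque, Tuple

Turn = Tuple[str, str]  # (speaker, text)


def echo_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call: reports how many lines it saw."""
    return f"(reply based on {len(prompt.splitlines())} lines of context)"


class HistoryQueue:
    """Stores every user input and model output in arrival order."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.turns: Deque[Turn] = deque()

    def ask(self, user_text: str) -> str:
        self.turns.append(("user", user_text))
        # The model is prompted with the entire queue, not just the latest query.
        prompt = "\n".join(f"{who}: {text}" for who, text in self.turns)
        reply = self.llm(prompt)
        self.turns.append(("model", reply))
        return reply


chat = HistoryQueue(echo_llm)
first = chat.ask("Plan a three-day trip to Rome.")
second = chat.ask("Swap day two for a museum day.")
print(first)
print(second)
```

The second call sees three lines of context (the first query, the first reply, and the new query), which is the behaviour the paragraph above describes: each response is conditioned on the whole interaction history.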

Essentially, CoT simulates a conversation where the model maintains an understanding of the past interactions, thus preserving the context and the flow. This proves valuable when the task requires a sequence of interactions, such as an ongoing dialogue, a story narration, or a complex problem-solving scenario.

Consider the scenario of a digital personal assistant that needs to maintain the continuity of a conversation with a user. Without a framework like CoT, the assistant would struggle to remember past interactions, making the conversation disjointed and frustrating for the user. With CoT, however, every new user query is considered in light of the past conversation, enabling a coherent, logical interaction that improves the user experience.

While the CoT framework significantly enhances the functioning of LLMs, it is not without its limitations. Although it provides a basic structure to maintain context, it lacks the intricate control mechanisms needed for advanced problem-solving. This limitation is what catalyzed the emergence of the Tree-of-Thoughts (ToT) framework, a more sophisticated, intricate approach to harnessing the power of LLMs.

Section 3: The Tree-of-Thoughts Framework: A Step Further

Picture a bustling city intersection, with pedestrians, bicycles, cars, and buses all heading in different directions. Imagine the chaos if there were no traffic signals or traffic officers to guide and control the flow. The Chain-of-Thoughts (CoT) framework is somewhat like this city intersection without any controls. It enables interaction between user queries and model responses but lacks a precise direction or control mechanism to handle complex reasoning tasks. The Tree-of-Thoughts (ToT) framework, on the other hand, acts as the traffic signal or traffic officer in this city intersection, ensuring smooth, controlled, and efficient movement.

The ToT framework is an evolution of the CoT approach and involves a more complex control structure over Large Language Models (LLMs). It presents an intricate system of agents and modules that allow precise control over the model’s outputs, enhancing the capabilities of the LLM to deliver improved performance on complex problem-solving tasks.

ToT essentially comprises four integral components: the large language model, a memory module, a prompter agent, and a checker agent. Together, these elements provide a structure where the LLM operates in harmony with its memory, prompter, and checker modules, resulting in a better-structured and more effective conversation.

The memory module functions as the model’s short-term memory, holding the essential details from the ongoing conversation. Unlike CoT, where context is maintained by preserving the history of interactions, the ToT’s memory module retains only relevant information, eliminating the noise of irrelevant details.

The prompter, acting as the traffic officer in our city intersection analogy, guides the LLM’s outputs based on the task at hand and the information stored in the memory module. It formulates the best possible prompts to the LLM, thereby steering the conversation in a fruitful direction.

The checker, meanwhile, plays a crucial role in validating the model’s outputs. Every time the LLM generates an output, the checker assesses it for validity in the context of the task. It acts as a filter, ensuring that only correct and relevant information gets passed on, thereby maintaining the quality and coherence of the conversation.
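To make the interplay of prompter, LLM, checker, and memory concrete, here is a minimal Python sketch of one possible control loop. Everything in it is an assumption made for illustration: the function names, the toy task (building an increasing sequence of three numbers), and the scripted stand-in for the LLM are hypothetical and are not taken from any published ToT implementation.

```python
from typing import Callable, Iterable, List


def prompter(task: str, memory: List[str], rejected: str) -> str:
    """Builds the next prompt from the task, accepted steps, and the last rejection."""
    lines = [f"Task: {task}"]
    lines += [f"Accepted step: {step}" for step in memory]
    if rejected:
        lines.append(f"Rejected attempt: {rejected}")
    lines.append("Propose the next step.")
    return "\n".join(lines)


def run_loop(
    task: str,
    llm: Callable[[str], str],
    checker: Callable[[List[str], str], bool],
    goal_reached: Callable[[List[str]], bool],
    max_rounds: int = 10,
) -> List[str]:
    memory: List[str] = []  # keeps only steps the checker accepted
    rejected = ""
    for _ in range(max_rounds):
        if goal_reached(memory):
            break
        proposal = llm(prompter(task, memory, rejected))
        if checker(memory, proposal):
            memory.append(proposal)
            rejected = ""
        else:
            rejected = proposal  # fed back to the prompter, never stored in memory
    return memory


def make_scripted_llm(replies: Iterable[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: returns canned replies in order."""
    it = iter(replies)
    return lambda prompt: next(it)


def increasing(memory: List[str], proposal: str) -> bool:
    """Toy checker: accept a number only if it exceeds the last accepted one."""
    return proposal.isdigit() and (not memory or int(proposal) > int(memory[-1]))


result = run_loop(
    task="Build an increasing sequence of three numbers.",
    llm=make_scripted_llm(["3", "1", "5", "4", "9"]),
    checker=increasing,
    goal_reached=lambda memory: len(memory) == 3,
)
print(result)
```

In this toy run the proposals "1" and "4" are rejected by the checker and never reach memory, so the loop ends with the accepted steps "3", "5", and "9". A real system would replace the scripted replies with model calls and the toy checker with task-specific validation.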

By incorporating these additional control mechanisms, the ToT framework significantly enhances the LLM’s capabilities and efficiency, making it a superior choice for more complex problem-solving tasks. As we move forward in this evolving landscape of artificial intelligence, the ToT framework could be a pivotal development that sets new standards in LLM-controlled applications.

Section 4: Comparison of Chain-of-Thoughts and Tree-of-Thoughts

To see what the ToT framework adds to CoT, let’s return to the orchestra metaphor. In Section 2, CoT played the conductor who keeps the instruments in sequence. That conductor keeps time but never checks whether the notes are right, nor chooses what the ensemble should play next. The ToT fills those roles with the prompter and the checker, so that every note and every beat is in harmony, creating a symphony that is more than the sum of its parts.

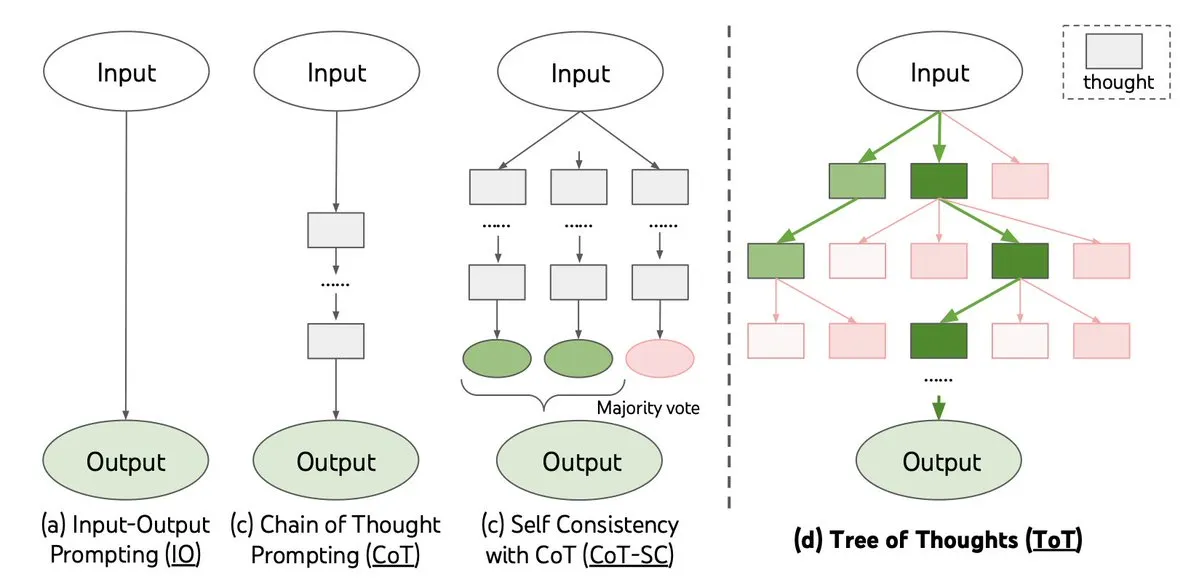

Figure 3. A comparison of logic flow of CoT vs. ToT

On a similar note, both CoT and ToT enable the LLM to generate responses, but the ToT does so with better control and structure. The inclusion of the prompter and the checker in the ToT ensures that the output generated by the model is not just a response but a valid and relevant one. It provides a more directed and goal-oriented approach towards problem-solving, making the ToT framework more efficient in handling complex reasoning tasks.

In a practical setting, both frameworks were put to the test on a series of Sudoku puzzles. Each significantly outperformed traditional LLM-based solvers. The ToT framework, however, showed superior performance: it solved every puzzle in the 3x3 benchmark set and achieved an 80% higher success rate on 4x4 puzzles than the CoT-based solvers. This improvement can be attributed to the ToT’s ability to maintain context and to check the validity of the LLM’s responses.

The ToT’s introduction of the checker component, an element missing in the CoT, plays a pivotal role in this superior performance. By assessing the correctness of the LLM’s outputs, it ensures the quality and relevance of the conversation, making the solution search more efficient.

However, it’s crucial to note that the ToT’s current rule-based controller has certain limitations, such as its inability to determine whether a partially filled board can be completed without violating Sudoku’s rules. This might affect its efficiency in certain problem-solving scenarios. Researchers anticipate that a neural network-based ToT controller could potentially overcome this shortcoming, an avenue worth exploring in future developments.
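The limitation described above can be illustrated with a small Python sketch. It assumes a simplified puzzle in which each row and each column of an n x n board must hold the numbers 1 to n without repeats; box constraints are left out, and the function names are hypothetical. The rule-based check only detects violations that already exist, so a board can pass it and still be impossible to finish.

```python
from typing import List

Board = List[List[int]]  # 0 marks an empty cell


def no_duplicates(cells: List[int]) -> bool:
    filled = [c for c in cells if c != 0]
    return len(filled) == len(set(filled))


def is_valid_partial(board: Board) -> bool:
    """Rule-based check: no row or column currently repeats a number."""
    n = len(board)
    in_range = all(0 <= c <= n for row in board for c in row)
    rows_ok = all(no_duplicates(row) for row in board)
    cols_ok = all(no_duplicates([board[r][c] for r in range(n)]) for c in range(n))
    return in_range and rows_ok and cols_ok


def can_complete(board: Board) -> bool:
    """Exhaustive backtracking search; only practical for tiny boards."""
    n = len(board)
    for r in range(n):
        for c in range(n):
            if board[r][c] == 0:
                for v in range(1, n + 1):
                    board[r][c] = v
                    found = is_valid_partial(board) and can_complete(board)
                    board[r][c] = 0  # restore the cell before returning or retrying
                    if found:
                        return True
                return False
    return is_valid_partial(board)


violating = [[1, 1, 0], [0, 0, 0], [0, 0, 0]]
dead_end = [[1, 2, 0], [0, 0, 3], [0, 0, 0]]

print(is_valid_partial(violating))
print(is_valid_partial(dead_end))
print(can_complete(dead_end))
```

The `dead_end` board breaks no rule yet, so the rule-based check accepts it, but its top-right cell would need a 3 that already sits in the same column. The exhaustive search catches this only by trying every option, which does not scale to larger boards. The sketch is meant to show the gap, not the neural controller that researchers anticipate.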

While both CoT and ToT have their merits and applications, the latter presents a more sophisticated approach, leveraging additional components to provide superior control and direction to the LLM’s outputs. As the field of artificial intelligence continues to evolve, the ToT framework, with its potential for enhanced problem-solving capabilities, stands as a promising direction for future research and development.

Section 5: Implications and Future Developments

Building on the orchestra metaphor, the Tree-of-Thoughts (ToT) framework gives the ensemble of Large Language Models (LLMs) firmer direction, allowing it to perform in a more harmonious and efficient manner. This is a notable step for the field of artificial intelligence, with potential implications that could ripple through various sectors of society and the economy.

Let’s begin with the economic ramifications. The enhanced capabilities of AI models powered by the ToT framework can potentially boost efficiency in sectors such as customer service and technical support. By facilitating effective and contextually appropriate problem-solving, these models could lead to a reduction in human labor, driving down operational costs. Additionally, they could be leveraged in areas like data analysis and forecasting, where their improved reasoning capabilities could help businesses make more informed decisions.

On a societal level, the implementation of ToT-based LLMs could transform education. These models could be used to create interactive learning platforms that can adapt to individual student needs, creating a more personalized learning experience. Furthermore, they could be harnessed to create more sophisticated virtual assistants, making technology more accessible for those with disabilities.

However, alongside these potential benefits, there are also challenges to consider. As with any technological advancement, there is a risk of job displacement as AI models become more capable. This necessitates a careful consideration of how these technologies are implemented, with a focus on creating opportunities for re-skilling and up-skilling for those whose roles may be affected.

Moving forward, there are several exciting avenues for future development. One of the limitations of the current ToT implementation is that it uses a rule-based checker, which may not be easily adaptable to all problems. Future iterations could explore the use of neural network-based checkers, which may be better suited to handle a broader range of problems.

Furthermore, a significant area of exploration is the implementation of self-play training methods, popularized by AI systems like AlphaGo and AlphaStar. This approach, where an AI agent learns by competing against itself, could allow the ToT system to access a broader solution space, potentially leading to the development of novel problem-solving strategies. The implementation of a ‘quizzer’ module, which could generate its own problems to train the ToT controller and the prompter agent, could be a promising approach in this context.

Overall, the development and implementation of the ToT framework represent a significant milestone in the advancement of AI capabilities. However, the journey doesn’t stop here. As we continue to refine these models and explore new methodologies, we must remain cognizant of the potential implications, both positive and negative, to ensure that these technologies are deployed in a manner that is beneficial for all.

Section 6: Conclusion and Final Thoughts

Just as an orchestra is an evolving entity, with new compositions to explore and different musicians adding their unique contributions, so too is the field of artificial intelligence. The Large Language Models, acting as the musicians, have been provided with innovative tools — the Chain-of-Thoughts (CoT) and the Tree-of-Thoughts (ToT) frameworks. These tools have allowed the language models to expand their potential, facilitating more complex reasoning and problem-solving capabilities akin to a harmonious symphony of intelligence.

In this exploration, we have seen how LLMs such as GPT-3 and GPT-4 advanced text generation, and how the CoT and ToT frameworks aim to extend those models to complex reasoning. We have delved into the mechanisms of the CoT and ToT frameworks, looking at how each controls the LLM and guides its responses. We have also seen their practical application in problem-solving tasks such as Sudoku puzzles, and discussed the potential implications and future directions of these advancements.

As AI continues to evolve, one thing remains certain — the field will continue to push the boundaries of what is possible, constantly exploring new compositions of intelligence. The development of the ToT framework and its application is just one example of this constant innovation, a promising avenue for pushing the capabilities of LLMs beyond what we currently know and understand.

However, in this symphony of progress, it’s crucial to maintain a balanced perspective. The advancements in AI bring with them a wave of opportunities — potential benefits to the economy, society, and several sectors such as education, customer service, and data analysis. At the same time, we must also confront the challenges that come with these advancements, such as potential job displacement and ethical considerations.

As we move forward, it’s crucial that the deployment of these technologies is carried out responsibly and ethically. It’s imperative that the potential benefits are harnessed effectively and that the challenges are addressed proactively. In this way, we can ensure that the symphony of AI progress plays a tune that is beneficial for all — a harmonious blend of advancement and responsibility.

In conclusion, the Chain-of-Thoughts and Tree-of-Thoughts frameworks are pivotal additions to the world of artificial intelligence. As we continue to refine and develop these models, AI’s potential could be harnessed even more effectively. It’s a thrilling prospect, filled with opportunities and challenges in equal measure. The baton is raised, and the orchestra awaits, ready to play the next movement in the grand symphony of artificial intelligence.