In the Crosshairs: The Ethics and Politics of AI in Modern Warfare

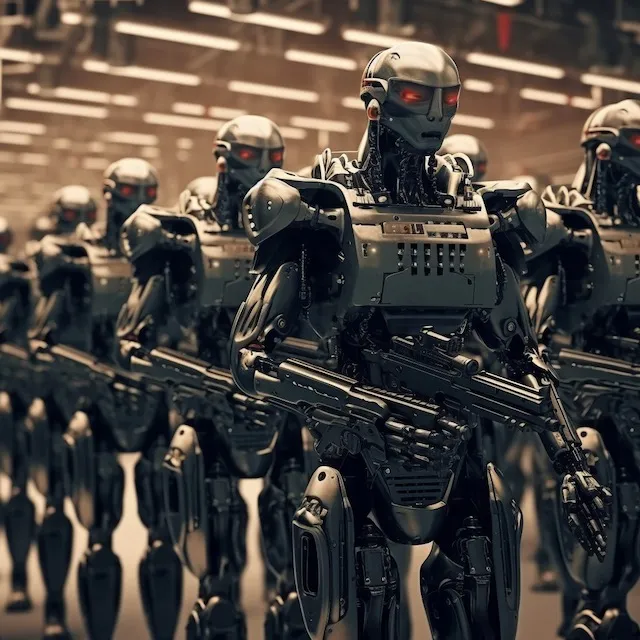

Artificial Intelligence (AI), with its capacity to learn, adapt, and execute at lightning speed, is increasingly permeating various sectors of society. Yet, as it encroaches on the age-old theatre of war, a Pandora’s Box of ethical, political, and philosophical conundrums is flung open. Decision-making, once the sole dominion of human judgement, now finds itself at the mercy of algorithms. And the question looms: can machines be entrusted with the grim calculus of warfare?

Inside the European Parliament, these issues are stirring passionate debates. Positions are polarised, with one camp viewing AI as a critical tool for defence modernisation, while the other raises the alarm on the potential risks of dehumanising conflict.

Key among these concerns is the challenge of programming AI systems to adhere to the principles of distinction and proportionality, the bedrock of international humanitarian law. In the heat of battle, can an algorithm discern a civilian from a combatant? And how does it calculate the acceptable level of collateral damage? The ramifications of mistakes are not lost on anyone, but the question of who would be held accountable for such errors is still very much in dispute.

The global landscape of military AI is complex and varied. The United States, a forerunner in this space, launched Project Maven in 2017 to apply AI to the analysis of drone surveillance imagery. China’s pursuits are far less transparent, but given its well-known prowess in AI, it is widely assumed to be making formidable advances in military applications as well.

Meanwhile, the European Union (EU) grapples with the challenge of reconciling strategic necessity with moral responsibility. A proposal for a blanket ban on lethal autonomous weapons systems stirred controversy, attracting both commendation for its ethical stance and criticism for potentially ceding strategic ground to rivals.

The EU’s approach to military AI illuminates broader geopolitical tensions. Advocates argue that AI is indispensable for European strategic autonomy, citing the need to keep pace with the United States and China. Opponents, by contrast, see a chance for Europe to carve out a leadership role in formulating a global regulatory framework for military AI, thus setting ethical and normative standards for its use.

One proposed compromise is the ‘human-in-the-loop’ model, where AI serves as an advisory tool rather than a decision-maker. However, this model is not without its detractors, who question whether it merely provides a veneer of human control over fundamentally automated processes.

Another intriguing proposition is to build ethical reasoning into AI systems themselves, so that they weigh ethical principles as they act. However, translating nuanced ethical norms into lines of code presents a formidable challenge. Even if we were to surmount that hurdle, there’s the added complexity of ensuring transparency and explainability in the AI’s decision-making process.

The deployment of AI in military operations is, therefore, not just a technological issue. It is a deeply political, ethical, and philosophical debate that transcends the confines of the battlefield. As Europe charts its course through this complex terrain, it confronts the existential question: should we entrust life-and-death decisions to machines?

The answers we arrive at will not only shape the future of warfare but redefine the essence of humanity in the age of intelligent machines. While our AI progeny may lack emotion, the decisions we make about their role in our world are fraught with emotional, ethical, and political weight.