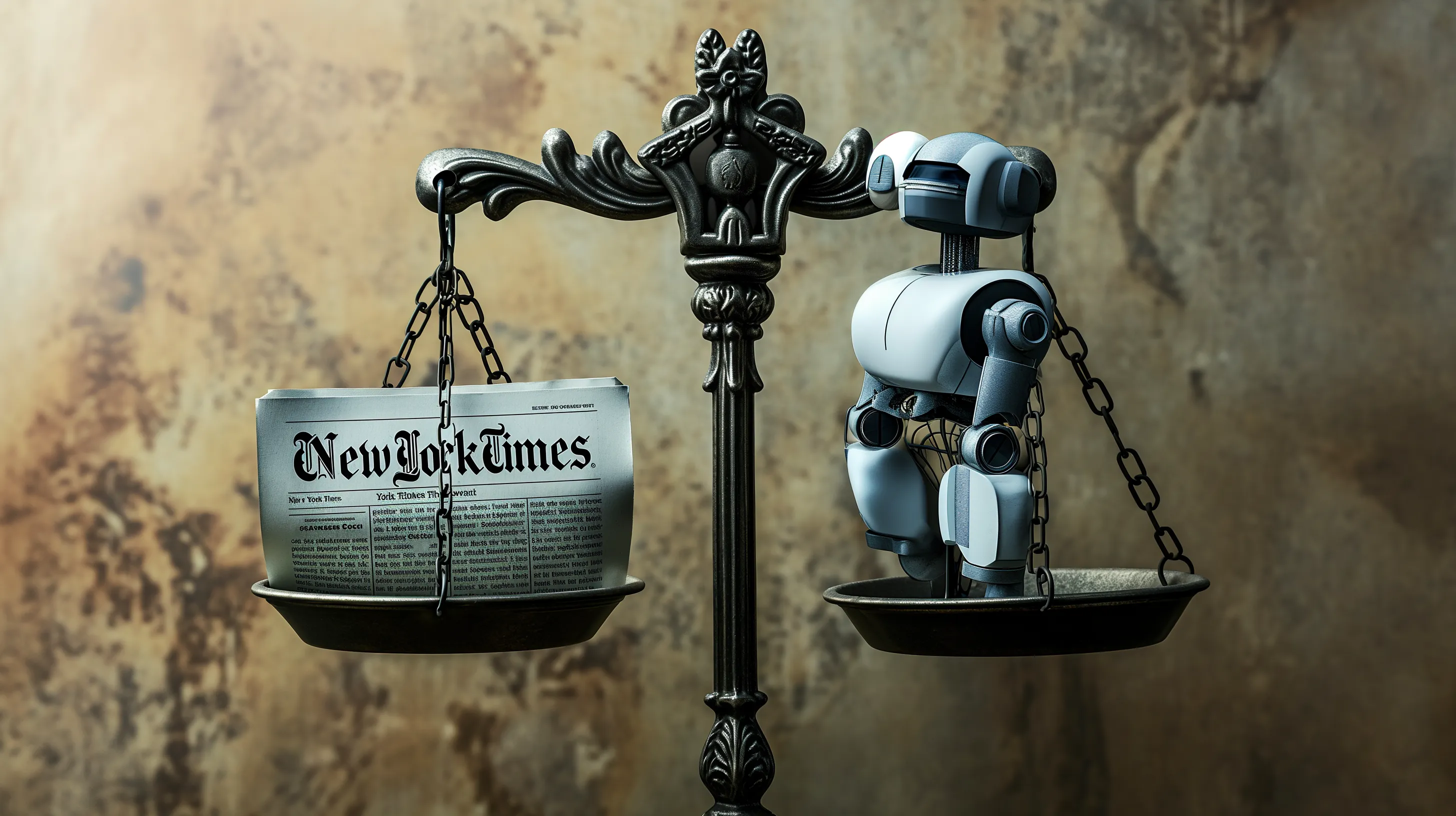

Don’t Stifle Artificial Intelligence Innovation

The New York Times fired a legal salvo by lodging a lawsuit against OpenAI and Microsoft for copyright infringement. The newspaper alleges the tech giants violated its intellectual property rights by using Times articles without permission to train AI models like ChatGPT. But this legal gambit seems an overreach that would, if successful, stifle AI innovation.

At issue is whether copying text to develop new technologies counts as “fair use” under copyright law. The Times claims it does not, because AI models like ChatGPT compete directly with the paper for readers and ad revenue. But the law has long recognised exceptions allowing unlicensed use of copyrighted material for research, commentary and other purposes. Without such carveouts, copyright risks impeding creativity and progress.

Training AI using vast pools of text seems a quintessential fair use. The tech firms are not republishing articles verbatim for commercial gain. Rather, machine learning algorithms churn through millions of examples to discern statistical patterns about how humans use language. The resulting AI generates original content and has transformative applications. Banning this research would be like forbidding authors from reading books when writing their own.

The Times also alleges harm, claiming that ChatGPT siphons away traffic and subscriptions. But new inventions often disrupt old business models; consider how streaming killed CD sales. Newspapers themselves displaced town criers. That an incumbent loses out to a new technology is no basis for handicapping progress. And nothing stops the Times from deploying AI itself. Several competitors already use systems built on OpenAI’s models.

Stifling AI might satisfy Luddites, but it would impoverish society. Natural language processing drives everything from better search to voice assistants to the automation of drudgery. Blocking platforms like ChatGPT from using diverse sources of text would balkanise innovation, as researchers silo training data within legal boundaries.

The Times has performed a public service by raising awareness of how AI technology works. But its legal action seems a self-interested attempt to protect its own franchise, one that would injure the public interest if it succeeded. Researchers need wide latitude to advance AI that promises vast benefits. Courts should think twice before declaring such progress unfair.