California's AI Regulation Debate: Balancing Innovation and Safety

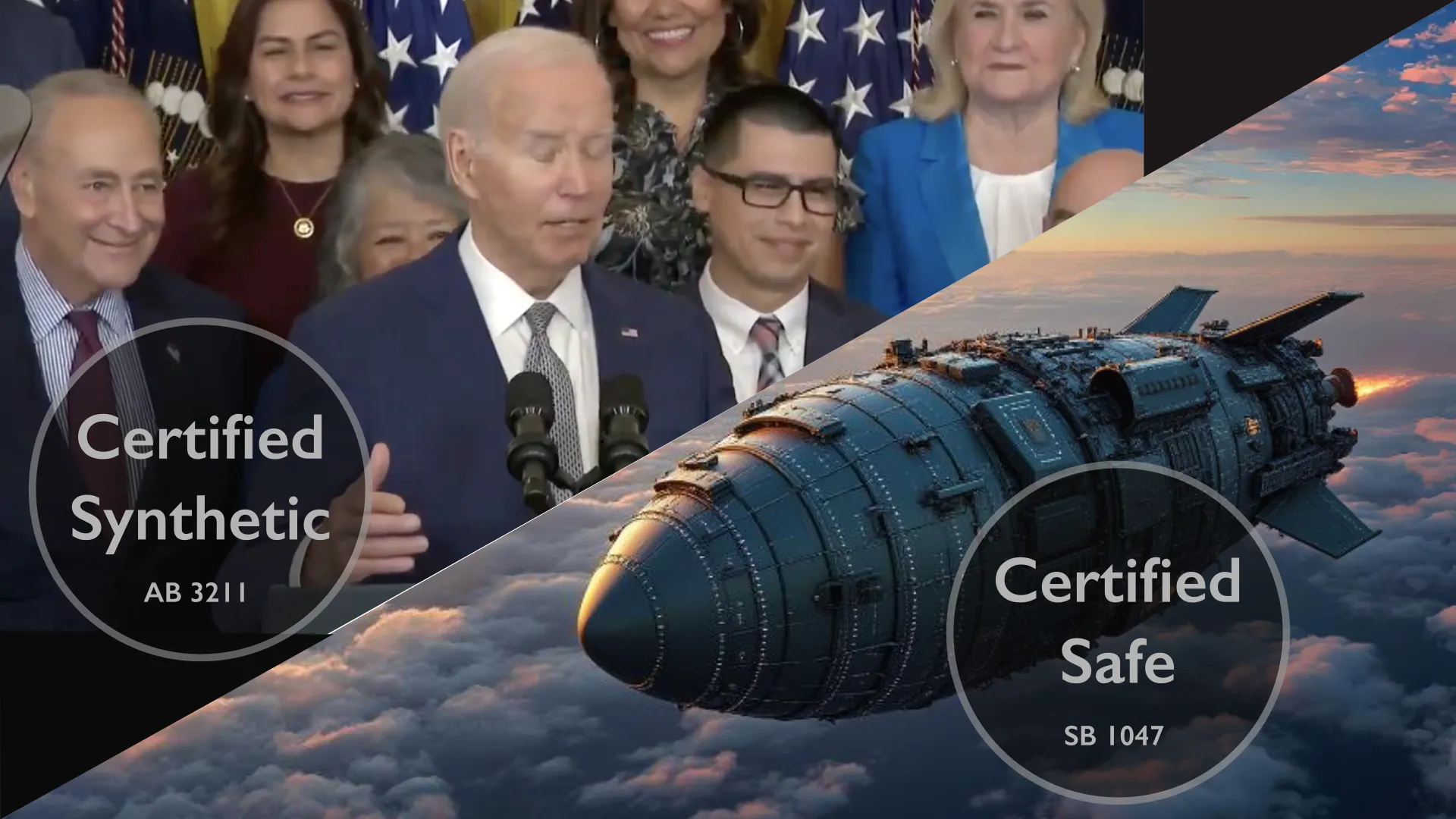

As artificial intelligence (AI) technologies rapidly evolve, so too does the legislative landscape attempting to govern their use. In California, two bills, Assembly Bill 3211 (AB 3211) and Senate Bill 1047 (SB 1047), have emerged as focal points in the debate over how best to regulate AI.

The two bills address different concerns about AI’s potential to both benefit and harm society. AB 3211 focuses on transparency and authenticity, requiring AI-generated content to be clearly labeled, while SB 1047 mandates safety testing for certain AI models. As these bills move through the California legislature, they have ignited a debate that encapsulates the broader tension between fostering innovation and ensuring public safety.

The Merits of Transparency: AB 3211

AB 3211, also known as the California Digital Content Provenance Standards, aims to enhance transparency in the digital space. The bill requires that AI-generated content, whether photos, videos, or audio, be labeled with “maximally indelible watermarks” that clearly indicate the content’s synthetic nature. The rationale behind AB 3211 is straightforward: in an era where deepfakes and AI-generated misinformation can manipulate public perception and disrupt democratic processes, transparency is crucial.

The bill has garnered support from major tech companies such as OpenAI, Adobe, and Microsoft. These companies are part of the Coalition for Content Provenance and Authenticity, which advocates for standards that help distinguish between human-made and AI-generated content. The coalition’s support suggests a recognition that transparency is in the industry’s best interest, particularly in maintaining public trust.

The Pros of AB 3211

Proponents of AB 3211 argue that it serves as a necessary safeguard against the misuse of AI technologies. By requiring watermarks, the bill aims to prevent the spread of false information, particularly in sensitive contexts such as elections. As OpenAI’s Chief Strategy Officer, Jason Kwon, noted, “New technology and standards can help people understand the origin of content they find online and avoid confusion between human-generated and photorealistic AI-generated content.” In an election year, when misinformation campaigns can have significant consequences, the bill’s requirements could play a vital role in protecting democratic processes.

Moreover, the bill sets a precedent for AI accountability. By making it mandatory for companies to disclose when content is AI-generated, it could deter malicious actors from using AI to deceive the public. This aligns with broader calls for greater regulation of AI technologies, which many experts believe are necessary to mitigate risks associated with AI’s rapid adoption.

The Cons of AB 3211

However, the bill is not without its critics. Some argue that AB 3211 could stifle innovation by imposing burdensome requirements on AI developers and platforms. A trade group representing Adobe, Microsoft, and other major software makers initially opposed the bill, describing it as “unworkable” and “overly burdensome.” Although later amendments softened the group’s stance, concerns remain that the bill could create significant compliance costs, particularly for smaller companies that lack the resources to implement sophisticated watermarking technologies.

Another concern is whether watermarks will work in practice. Watermarks may help identify AI-generated content, but their usefulness depends largely on public awareness and on users’ ability to find and interpret the watermarking information. Most internet users do not examine metadata, and unless the bill is accompanied by widespread public education campaigns, its impact could be limited.

The Quest for Safety: SB 1047

While AB 3211 focuses on transparency, SB 1047 addresses safety. This bill requires that developers conduct rigorous safety testing on certain AI models before they are deployed. The intention is to prevent AI systems from causing harm, whether through unintended behaviors or malicious use. SB 1047 has faced significant opposition from the tech industry, including OpenAI, which argued that the bill could hinder innovation and lead to a “chilling effect” on AI development.

The Pros of SB 1047

Supporters of SB 1047 contend that safety testing is a logical step in the responsible development of AI. As AI systems become more sophisticated, the risks associated with their misuse, such as the potential for autonomous weapons or AI-driven cyberattacks, increase. By mandating safety testing, SB 1047 aims to identify and mitigate these risks before they can cause real-world harm. This proactive approach is crucial in ensuring that AI technologies do not outpace regulatory frameworks and societal safeguards.

The bill also reflects a growing consensus among AI ethicists and researchers that AI development must be accompanied by rigorous oversight. Given the high stakes involved, many believe that waiting until after an AI-related incident occurs is not an option. SB 1047’s requirements could serve as a model for other jurisdictions grappling with the challenges of AI governance.

The Cons of SB 1047

Despite its intentions, SB 1047 has been met with resistance from the tech community. Critics argue that the bill’s requirements could impede innovation by making it more difficult and costly for companies to develop and deploy AI systems. There is also concern that the bill could create a competitive disadvantage for California-based companies, which would face more stringent regulations than those in other states or countries. This could lead to an exodus of AI talent and investment, ultimately harming California’s status as a tech hub.

Additionally, the bill’s opponents argue that it may not effectively address the real challenges associated with AI safety. While safety testing is important, it is not a panacea. AI systems are inherently complex and unpredictable, and no amount of pre-deployment testing can account for every possible outcome. Moreover, the rapid pace of AI development means that models can quickly become obsolete, potentially rendering the results of safety tests irrelevant.

The Path Forward

As AB 3211 and SB 1047 make their way through the California legislature, they highlight the broader debate over how best to regulate AI technologies. Both bills aim to address legitimate concerns about transparency and safety, but they also reflect the tension between fostering innovation and ensuring public protection. Striking the right balance will be crucial not just for California, but for the world as it grapples with the promises and perils of artificial intelligence.

While AB 3211’s focus on transparency seeks to build public trust and prevent misinformation, its impact will depend on effective implementation and public education. Meanwhile, SB 1047’s emphasis on safety testing underscores the need for proactive regulation, but also raises questions about the potential costs to innovation and competitiveness.

Ultimately, the success of these bills will depend on their ability to adapt to the rapidly evolving AI landscape. As policymakers consider these and other regulatory measures, they must navigate a complex array of factors to ensure that AI technologies develop in ways that benefit society while minimizing harm. The road ahead is uncertain, but one thing is clear: the conversation about AI governance is only just beginning.